As weather patterns grow increasingly erratic, precise forecasting has become a crucial defence against nature’s unpredictable forces. Traditional forecasting methods, however, struggle to keep pace with the complexity of a changing climate. This is where High-Performance Computing (HPC) comes in, enabling forecasts that are both more accurate and more efficient.

HPC is the computational muscle behind modern weather forecasts: it turns the equations that describe the atmosphere into numerical simulations over vast, interconnected datasets. Supercomputers within HPC clusters process these datasets and simulate atmospheric conditions in far greater detail than was previously possible. They can:

- Process real-time data: HPC continuously ingests data from various sources, including satellites and ground stations, providing a constant stream of observations to enhance weather models. This real-time data integration ensures that forecasts are based on the most up-to-date information available.

- Run high-resolution simulations: With more computing power, models can run on finer grids that capture details such as air currents, storm cloud dynamics, and local microclimates, supporting forecasts at the scale of specific neighbourhoods. This high-resolution capability enables a more nuanced understanding of weather patterns.

- Accelerate research and development: HPC is not limited to forecasting alone; it plays a crucial role in scientific discovery. Researchers leverage HPC to test new models, gain insights into climate change, and develop strategies for a sustainable future. The computational power of HPC accelerates the pace of advancements in weather science, contributing to our understanding of climate dynamics and potential mitigation strategies.
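To give a feel for why high-resolution simulation depends on HPC, here is a rough back-of-the-envelope sketch of how cost grows as the grid gets finer. It rests on simplifying assumptions that are not from the original text: a uniform horizontal grid over the whole globe, a fixed number of vertical levels, and a time step that shrinks in proportion to the grid spacing (as stability conditions for such models typically require). The function names are hypothetical and chosen for illustration only.

```python
# Rough cost scaling for a global weather grid (illustrative assumptions only).

EARTH_SURFACE_KM2 = 5.1e8  # approximate surface area of the Earth in km^2


def grid_columns(spacing_km):
    """Approximate number of horizontal grid columns at a given spacing."""
    return EARTH_SURFACE_KM2 / spacing_km ** 2


def relative_cost(coarse_km, fine_km):
    """Estimated cost multiplier when refining from coarse_km to fine_km.

    Assumes cost scales with (number of columns) x (number of time steps):
    columns grow with the square of the refinement ratio, and the time step
    is assumed to shrink linearly with spacing, adding one more factor.
    """
    ratio = coarse_km / fine_km
    return ratio ** 3


if __name__ == "__main__":
    for spacing in (25, 10, 5, 1):
        print(f"{spacing:>3} km grid: ~{grid_columns(spacing):,.0f} columns")
    print("Cost multiplier from 10 km to 5 km:", relative_cost(10, 5))
```

Under these assumptions, halving the grid spacing multiplies the work by roughly eight, which is why neighbourhood-scale detail quickly moves beyond what conventional machines can deliver in time for a forecast.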

The accuracy advantage of HPC comes from pairing this computing power with sophisticated weather models, offering:

- Finer-grained detail: Traditional models often struggle with localised nuances, providing broad forecasts. HPC empowers models to capture subtle variations in terrain, temperature, and humidity, painting a more accurate picture of the weather.

- Ensemble forecasting: HPC runs many simulations with slightly varied starting conditions, producing a range of possible outcomes. The spread of those outcomes gives a clearer picture of the inherent uncertainty in a weather forecast.

- Advanced predictive capabilities: HPC-powered models can predict extreme weather events, from hurricane paths to heatwave intensities, providing communities with crucial time to prepare and mitigate potential impacts.
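The ensemble idea in the list above can be sketched with a toy model. The example below is not a weather model: it uses the Lorenz-63 equations, a well-known three-variable chaotic system, as a stand-in for the atmosphere. The member count, noise level, step size, and function names are all assumptions made for illustration.

```python
import random
import statistics


def lorenz_step(state, dt=0.01, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Advance the Lorenz-63 system by one step using 4th-order Runge-Kutta."""

    def deriv(s):
        x, y, z = s
        return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)

    def shifted(s, k, h):
        return tuple(si + h * ki for si, ki in zip(s, k))

    k1 = deriv(state)
    k2 = deriv(shifted(state, k1, dt / 2))
    k3 = deriv(shifted(state, k2, dt / 2))
    k4 = deriv(shifted(state, k3, dt))
    return tuple(
        s + dt / 6 * (a + 2 * b + 2 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )


def run_ensemble(base_state, members=20, steps=1500, noise=1e-3, seed=0):
    """Run slightly perturbed copies of one forecast; track mean and spread of x."""
    rng = random.Random(seed)
    # Each member starts from the same state plus a tiny random perturbation.
    states = [
        tuple(v + rng.gauss(0.0, noise) for v in base_state)
        for _ in range(members)
    ]
    means, spreads = [], []
    for _ in range(steps):
        states = [lorenz_step(s) for s in states]
        xs = [s[0] for s in states]
        means.append(statistics.mean(xs))      # ensemble mean of x
        spreads.append(statistics.pstdev(xs))  # ensemble spread of x
    return means, spreads


if __name__ == "__main__":
    means, spreads = run_ensemble((1.0, 1.0, 1.0))
    print("spread after first step:", spreads[0])
    print("spread after last step: ", spreads[-1])
```

Each member is independent of the others, so on an HPC cluster the members could in principle run in parallel. The growing spread between members is the kind of signal forecasters read as rising uncertainty at longer lead times.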

Looking ahead, the future of weather forecasting is closely tied to HPC advancements, promising:

- Personalised weather forecasts: Advancements in HPC could lead to more localised and personalised forecasts, offering specific details such as microclimate conditions for individual streets. This personalised approach makes weather information more relevant to individuals and improves decision-making.

- Proactive disaster management: Deeper insights into extreme weather patterns, facilitated by HPC services, enable communities to anticipate and prepare for floods, droughts, and heatwaves, minimising damage and saving lives. The proactive use of HPC-driven forecasts enhances disaster preparedness and response strategies.

- A sustainable future: HPC-powered climate models provide valuable information for formulating policies and strategies that combat climate change and mitigate its impact. Integrating HPC services into climate research supports informed policies that address environmental challenges and promote long-term sustainability.

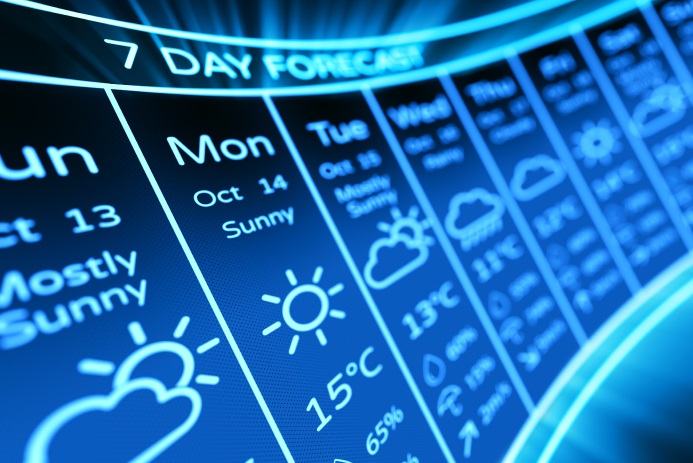

HPC is shaping the future of weather forecasting and contributing to a more resilient and sustainable future for all. The next time you check your weather app, consider the supercomputers within HPC clusters that process vast amounts of data behind the scenes to provide the forecasts that guide our lives and help us prepare for future challenges.

Yotta HPC as a Service enhances AI, ML, and Big Data projects with advanced GPUs in a Tier IV data center, offering supercomputing capabilities, extensive storage, an optimised network, and scalability at a fraction of the cost of establishing an on-premise High Compute environment. Users can access on-demand dedicated GPU compute instances powered by NVIDIA, deploy workloads swiftly without CAPEX investment, and enjoy a scalable HPC infrastructure on flexible monthly plans, all within a comprehensive end-to-end environment.