While hyperscale data centers are already changing the way OTT players operate, the adoption of Blockchain will be a real game-changer for the sector.

Coronavirus is one word that has taken the world by storm. Panic is widespread, public transport is shut, and working from home has become the norm. However, something else is gaining popularity among those stuck at home in these times of crisis: OTT media platforms like Netflix, Amazon, Disney TV, Hotstar, etc. The OTT trend has picked up so much during the pandemic that Nielsen has predicted a 60% increase in online streaming, making it necessary for players like Netflix and Amazon Prime, among others, to adjust their business strategies.

Thanks to deep internet penetration, cheap data, and exciting content, video consumption has been on a growth trajectory in India for some time now. The latest BCG-CII report indicates that the average digital video consumption in India has more than doubled, to 24 minutes per day from 11 minutes, over the past two years. As the report rightly points out, the rise in these numbers is also due to the growing number of OTT players in the country.

Over-the-top world view

OTT technologies have undoubtedly disrupted the Indian entertainment landscape. Subscription-based, on-demand OTT platforms like Netflix, Hotstar, and Amazon Prime are slowly and steadily becoming the preferred medium of entertainment for modern Indians.

The shift in viewer sensibilities has propelled the growth of the country’s OTT industry. As per a Boston Consulting Group report, the Indian OTT market, which is currently valued at USD 500 million, is expected to reach USD 5 billion by 2023. Television sets are also becoming smarter. They now cater to these OTT technologies by presenting their content in a high-quality viewing experience. No wonder the Indian television market is projected to surpass USD 13 billion by 2023, led by this new breed of Smart TVs on the block.

What powers the OTT?

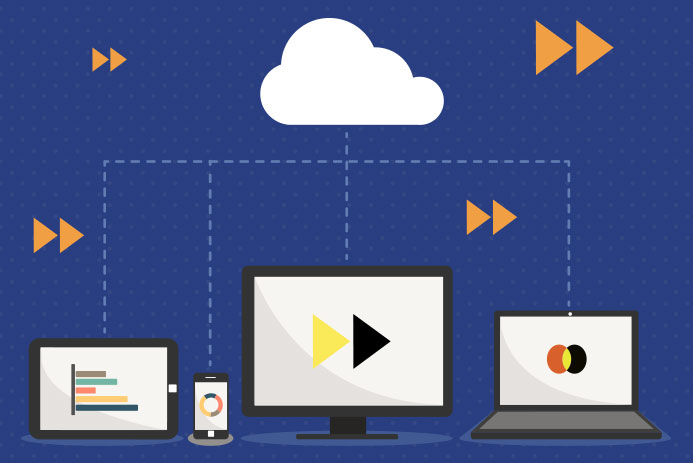

CDN, or content delivery network, is the infrastructure through which OTT content is delivered to the end customer. Simply put, a CDN hosts the original content (video, pictures, etc.) on a central server and then shares it remotely through caching and streaming servers located across the globe. Because viewer traffic flows through this network, a capacity planning feature built into the CDN is required to monitor network traffic and plan capacity increases ahead of time.
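Planning capacity ahead of time can be illustrated with a rough estimate of how long existing capacity will last. The sketch below is only an assumption-laden illustration: the function name `months_until_full`, its parameters, and the steady compound-growth model are hypothetical and do not come from any CDN product.

```python
def months_until_full(peak_gbps, capacity_gbps, monthly_growth_pct, horizon=60):
    """Estimate how many months pass before peak traffic exceeds capacity.

    Assumes (hypothetically) that peak traffic grows by a fixed percentage
    every month. Returns None if capacity holds for the whole horizon.
    """
    if peak_gbps <= 0 or capacity_gbps <= 0 or monthly_growth_pct <= 0:
        raise ValueError("inputs must be positive")

    traffic = peak_gbps
    for month in range(horizon + 1):
        if traffic > capacity_gbps:
            return month
        # Compound the assumed monthly growth.
        traffic *= 1 + monthly_growth_pct / 100
    return None


if __name__ == "__main__":
    # Illustrative numbers only: 100 Gbps peak, 200 Gbps capacity, 10% growth.
    print(months_until_full(100, 200, 10))
```

A real planner would work from measured traffic rather than a fixed growth rate; the point is simply that the lead time for adding capacity can be derived from monitoring data.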

Video storage on the cloud

Video files are unusually large. To compress them on the fly and stream them on-demand to hundreds of millions of people with high resolution and minimal latency requires blazingly fast storage speed and bandwidth. It is a technological nightmare. With the growing quantity and sophistication of OTT video content, there is more traffic, more routing, and more management across the CDNs.

The OTT players typically rent space in the cloud to store their data. Their content keeps expanding, setting up in-house infrastructure means huge capex, and the ever-changing technology landscape demands a high level of expertise. Hence, going to a third-party service provider makes complete sense. This keeps things simple for everyone: the user asks for a specific file to be played, and the video player, in turn, asks its content delivery network, or CDN, to fetch the desired content from the cloud.
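The request flow described above (player asks the CDN, the CDN fetches from the cloud) can be sketched as a small cache in front of an origin store. This is a minimal illustration, not how any particular CDN is implemented: the `EdgeCache` class, the dictionary standing in for cloud storage, and the least-recently-used eviction policy are all assumptions made for the example.

```python
from collections import OrderedDict


class EdgeCache:
    """Hypothetical CDN edge node: serve from cache, else fetch from origin."""

    def __init__(self, origin, capacity=2):
        self.origin = origin        # dict standing in for cloud storage
        self.capacity = capacity    # max number of files kept at the edge
        self.cache = OrderedDict()  # ordered from least to most recently used
        self.hits = 0
        self.misses = 0

    def fetch(self, name):
        if name in self.cache:
            # Cache hit: mark the file as most recently used.
            self.cache.move_to_end(name)
            self.hits += 1
            return self.cache[name]

        # Cache miss: go back to the origin (raises KeyError if absent).
        self.misses += 1
        data = self.origin[name]
        self.cache[name] = data
        if len(self.cache) > self.capacity:
            # Evict the least recently used file.
            self.cache.popitem(last=False)
        return data


if __name__ == "__main__":
    origin = {"episode1.mp4": b"...", "episode2.mp4": b"..."}
    edge = EdgeCache(origin)
    edge.fetch("episode1.mp4")  # first request goes to the origin
    edge.fetch("episode1.mp4")  # repeat request is served from the edge
    print(edge.hits, edge.misses)
```

The second request never reaches the cloud, which is the effect that keeps latency low for popular titles.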

Need for speed

The need for speed, scalability, and low network latency is driving OTT players towards hyperscale data center service providers. They need all three in large measure, 24x7x365, without a glitch, and even more so during times of crisis like the current COVID-19 situation. Since the current and future demand of these players cannot be fulfilled by traditional data center players, what they need are hyperscale data centers that can scale up the provisioning of computing, storage, networking, connectivity, and power resources on demand.

These data centers are designed and constructed on large land banks with expansion in mind. They are also built for agility, something OTT players highly desire. The OTT players are looking for service providers who can quickly increase bandwidth and storage capacity during periods of heavy streaming and scale them down during slow times.
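Scaling up during heavy streaming and down during slow times can be expressed as a simple target-utilization rule. The following is a hedged sketch only: `desired_units`, the 60% target, and the unit limits are hypothetical values chosen for illustration, not figures from any provider.

```python
def desired_units(current_units, utilization_pct, target_pct=60,
                  min_units=1, max_units=100):
    """Return how many capacity units keep utilization near the target.

    All parameters are illustrative assumptions. Percentages are integers
    so the rounding below is exact.
    """
    if current_units < 1 or utilization_pct < 0 or target_pct <= 0:
        raise ValueError("invalid input")

    # Integer ceiling of current_units * utilization_pct / target_pct.
    needed = (current_units * utilization_pct + target_pct - 1) // target_pct

    # Never go below the floor or above what the facility can provision.
    return max(min_units, min(max_units, needed))


if __name__ == "__main__":
    print(desired_units(10, 90))  # busy period: scale up
    print(desired_units(10, 30))  # quiet period: scale down
```

With ten units running at 90% utilization the rule asks for more units, and at 30% it releases some, which mirrors the elasticity the OTT players are asking for.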

Redundant connectivity, local internet exchange connectivity, and national exchange connectivity are also things that an OTT player looks for in a data center, and they are easier to find, along with everything mentioned above, in a hyperscale facility.

Recently, Spotify, the Swedish streaming giant, had to shell out USD 30 million in a settlement over a royalty claim by an artist. With Blockchain, you can deploy a smart contract, and the chain can also be used to store a cryptographic hash of the original digital music file. The hash is associated with the address and identity of the creator.
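The idea of tying a file's hash to its creator can be illustrated off-chain. The sketch below assumes SHA-256 as the hash and uses an in-memory dictionary as a stand-in for an on-chain record; `RightsRegistry`, `fingerprint`, and the sample identifiers are hypothetical names, and no real blockchain or smart-contract API is involved.

```python
import hashlib


def fingerprint(data: bytes) -> str:
    """SHA-256 digest of the file contents, as a hex string."""
    return hashlib.sha256(data).hexdigest()


class RightsRegistry:
    """In-memory stand-in for an on-chain registry of creative works."""

    def __init__(self):
        self._records = {}

    def register(self, data: bytes, creator_id: str, address: str) -> str:
        digest = fingerprint(data)
        if digest in self._records:
            # The same file cannot be claimed twice.
            raise ValueError("file already registered")
        self._records[digest] = {"creator": creator_id, "address": address}
        return digest

    def lookup(self, data: bytes):
        """Return the record for this exact file, or None if unknown."""
        return self._records.get(fingerprint(data))


if __name__ == "__main__":
    registry = RightsRegistry()
    track = b"raw bytes of the original music file"
    registry.register(track, creator_id="artist-001", address="0xEXAMPLE")
    print(registry.lookup(track))
```

Anyone holding the original file can recompute the hash and look up who registered it, which is the property a royalty dispute would rely on.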

Another trend that will be a game-changer for this industry is 5G. With 5G, the next generation of networks will be able to cope better with running several high-demand applications like VR and AR. This will change the way content is developed and looked at on OTT platforms. It will also, however, make the role of hyperscale data centers more critical. The networks will ultimately reside in them, and they will be the actual load bearers of it all.