Organisations are increasingly embracing a multi-cloud strategy to drive agility, mitigate risks, and enhance performance. A multi-cloud approach, which involves leveraging two or more cloud service providers (CSPs), offers flexibility, resilience, and freedom from vendor lock-in. As businesses navigate this complex environment, Cloud Managed Service Providers (MSPs) have emerged as strategic enablers, helping enterprises maximise the benefits of multi-cloud while minimising its challenges.

The Shift Towards Multi-Cloud Environments

Traditionally, businesses relied on a single cloud vendor to host their workloads, data, and applications. However, as operations become more global, digital, and compliance-driven, the drawbacks of single-vendor dependency, such as limited customisation, regional outages, and pricing inflexibility, have become apparent.

According to Flexera’s 2024 State of the Cloud Report, 93% of enterprises have implemented a multi-cloud approach, with 87% embracing hybrid cloud models that combine both public and private cloud services. This shift is driven by the need for enhanced flexibility, risk mitigation, and performance optimisation.

Enter the multi-cloud strategy.

With multi-cloud, organisations can:

- Distribute workloads across different CSPs (e.g., AWS, Azure, Google Cloud) for optimal performance.

- Mitigate downtime risks by avoiding reliance on one provider.

- Leverage the best-in-class services from different vendors (e.g., Google’s AI/ML capabilities, Azure’s enterprise integrations).

- Comply with regional data regulations by hosting data across multiple geographies.

Yet, managing a multi-cloud environment is no small feat—it introduces complexity in operations, security, and cost management. This is where Cloud MSPs play a crucial role.

How Cloud MSPs Empower Multi-Cloud Success

Cloud Managed Service Providers act as trusted partners that design, deploy, and manage multi-cloud architectures tailored to specific business needs. Here’s how they empower organisations with flexibility and vendor independence:

- Simplified Cloud Management: MSPs unify the management of disparate cloud platforms under a single pane of glass. They provide tools and dashboards that give visibility into usage, performance, and costs across cloud environments. This consolidation ensures businesses don’t need separate teams or tools for each cloud provider.

- Workload Optimisation & Portability: One of the biggest advantages of multi-cloud is the ability to run the right workload on the right cloud. MSPs assess application requirements and help businesses map them to the most suitable cloud platform, optimising both performance and cost. They also enable workload portability, helping businesses move applications or data between clouds with minimal re-architecting. This reduces vendor lock-in and enhances operational agility.

- Security & Compliance: Multi-cloud security can be complex due to varying security models and compliance standards across providers. MSPs bring in standardised security practices, continuous monitoring, and threat intelligence. They also help ensure alignment with regulations such as GDPR, HIPAA, or India’s Digital Personal Data Protection (DPDP) Act, 2023.

- Disaster Recovery & High Availability: MSPs design resilient architectures that use multiple clouds for redundancy and failover. If one cloud suffers an outage, operations can fail over to another, keeping disruption to a minimum and supporting business continuity.

- Cost Optimisation: Cloud sprawl is a common issue in multi-cloud setups. MSPs monitor resource utilisation, eliminate redundancies, and suggest cost-saving opportunities. Through rightsizing, reserved instances, and consumption insights, businesses can stay on budget without compromising performance.
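
As a rough illustration of the workload-mapping and failover ideas in the list above, here is a minimal Python sketch. It filters cloud offerings by a workload's data residency and capability needs, ranks the eligible ones by cost, and picks a failover target on a different provider. Everything in it is an assumption made for illustration: the provider labels (`cloud-a`, `cloud-b`, `cloud-c`), the region names, the capability tags, and the cost figures are hypothetical and do not reflect any real pricing or any MSP's actual tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Offering:
    provider: str            # hypothetical provider label
    region: str              # where the data would be hosted
    capabilities: frozenset  # e.g. {"gpu", "managed-sql"}
    monthly_cost: float      # illustrative figure, not real pricing


@dataclass(frozen=True)
class Workload:
    name: str
    allowed_regions: frozenset  # data residency constraint
    required: frozenset         # capabilities the workload needs


def rank_offerings(workload, offerings):
    """Return the offerings that satisfy the workload, cheapest first."""
    eligible = [
        o for o in offerings
        if o.region in workload.allowed_regions
        and workload.required <= o.capabilities  # all needs are covered
    ]
    return sorted(eligible, key=lambda o: o.monthly_cost)


def place(workload, offerings):
    """Pick a primary offering and, if one exists, a failover target
    hosted by a different provider."""
    ranked = rank_offerings(workload, offerings)
    if not ranked:
        return None, None
    primary = ranked[0]
    failover = next(
        (o for o in ranked[1:] if o.provider != primary.provider), None
    )
    return primary, failover


# Hypothetical catalogue of offerings across three providers.
OFFERINGS = [
    Offering("cloud-a", "in-west", frozenset({"gpu", "managed-sql"}), 1200.0),
    Offering("cloud-b", "in-west", frozenset({"managed-sql"}), 900.0),
    Offering("cloud-c", "eu-central", frozenset({"gpu"}), 800.0),
    Offering("cloud-b", "in-south", frozenset({"gpu", "managed-sql"}), 1500.0),
]

billing = Workload(
    "billing-db",
    allowed_regions=frozenset({"in-west", "in-south"}),
    required=frozenset({"managed-sql"}),
)

primary, failover = place(billing, OFFERINGS)
print(primary.provider, primary.region)    # cloud-b in-west
print(failover.provider, failover.region)  # cloud-a in-west
```

The cheapest offering overall (`cloud-c`) is skipped because it sits outside the allowed regions, which shows how a residency rule can override pure cost. A real placement decision would weigh many more factors, such as latency, egress charges, and existing contracts.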

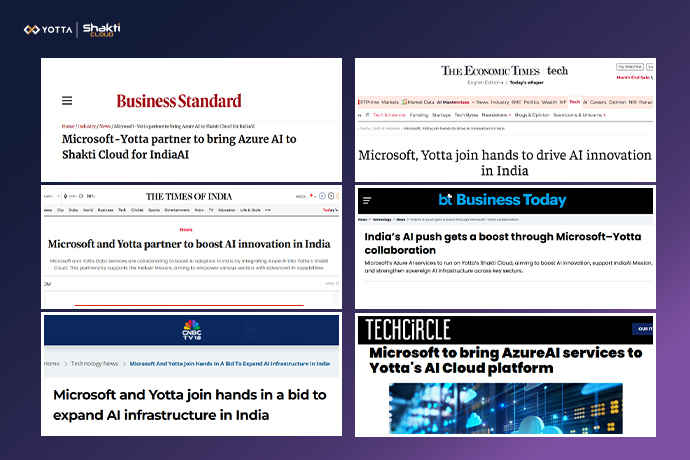

Yotta: Driving Multi-Cloud Excellence in India

As a leading digital transformation and cloud services provider, Yotta is playing a pivotal role in helping Indian enterprises transition seamlessly to multi-cloud environments. With its robust ecosystem of data centers, cloud platforms, and managed services, Yotta offers businesses a vendor-agnostic and scalable foundation for cloud adoption. However, managing multiple cloud environments can introduce complexities in integration, security, and operations.

Yotta addresses these challenges through its Hybrid and Multi Cloud Management Services, offering a unified platform that seamlessly integrates private, public, hybrid, and multi-cloud environments. By providing a single-window cloud solution, Yotta simplifies cloud management, enhances scalability, and ensures robust security across diverse cloud infrastructures. This comprehensive approach empowers enterprises to manage their IT resources efficiently, adapt to evolving business needs, and drive digital transformation initiatives.

Here’s what sets Yotta apart:

- Interoperability with major cloud providers.

- Expert-led migration and deployment support.

- End-to-end managed services including security, monitoring, and governance.

- Localised data centers that comply with India’s data residency regulations.

Whether you’re a large enterprise or a fast-growing startup, Yotta ensures that your multi-cloud journey is efficient, secure, and aligned with business goals.

Conclusion

Multi-cloud offers the agility and resilience that modern enterprises need to stay competitive. However, to harness its full potential, organisations must overcome operational and technical complexities.

That’s where Yotta comes in, bridging the gap between strategy and execution and delivering a cloud experience that is secure and truly vendor-independent.

By partnering with the right MSP, businesses can turn multi-cloud from a complex challenge into a strategic advantage.